Are you developing a solution based on artificial intelligence, or integrating AI into your processes, products, or services? Get ready: you will need to comply with a new regulation, the AI Act.

France Digitale, the largest startup association in Europe; Gide, a leading international law firm; and Wavestone have joined forces to co-write this white paper, which covers all the ins and outs of the new law on artificial intelligence.

The AI Act – What is its purpose?

The AI Act aims to ensure that artificial intelligence systems and models marketed within the European Union are used in a way that is ethical, safe, and respectful of the EU's fundamental rights. It is also intended to strengthen the competitiveness and innovation of businesses in AI. By reducing the risk of AI abuses, it aims to strengthen users' confidence in AI and encourage its adoption.

Who needs to ensure compliance?

The regulation applies to all providers*, distributors*, and deployers* of AI systems and models that are legal entities (companies, foundations, associations, research laboratories, etc.) and that market their AI system or model within the European Union, whether their registered office is in the EU or outside it.

Are all AI systems and models subject to regulation?

The level of regulation and the associated obligations depend on the risk level posed by the AI system or model. There are four risk levels, each with its own level of compliance requirements, as summarized in the figure below.

Specific obligations apply to generative AI and to the development of general-purpose AI (GPAI) models. These requirements vary depending on whether the model is open source and on other secondary criteria (computing power, number of users, etc.).

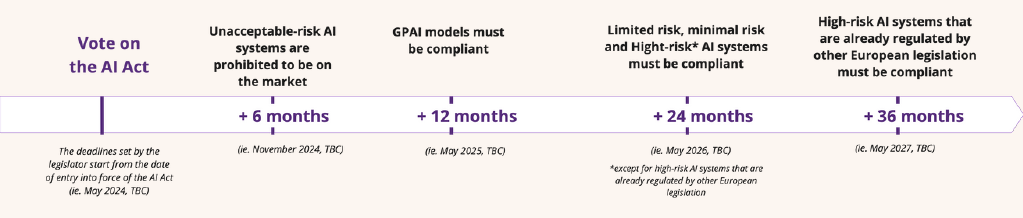

What is the compliance deadline?

Different deadlines, ranging from 6 to 36 months after the Act's entry into force, apply depending on the risk level of AI systems and models. Whatever the applicable deadline, companies should prepare early and anticipate compliance, because this work may disrupt their tech, product, and legal roadmaps.

Although the regulator designed this phased timeline to give companies enough time to comply, it is crucial to plan the next steps now.

How can Wavestone help your organization?

Wavestone is already helping its clients comply with the AI Act. This involves:

- Mapping their AI systems to identify existing and future AI projects

- Defining the project team

- Estimating the budget for the AI project

- Estimating the budget for the associated compliance

- Assessing the risk level associated with different AI systems

- Testing these systems to ensure there are no algorithmic biases, among other considerations.

The goal is to obtain CE marking so that the product can be placed on the market.

Chadi Hantouche

Partner, Wavestone

To achieve compliance, companies will have to carry out tests, provide the required documentation, interact with the governance bodies set up by the regulation, and follow the compliance process over time.

Here are three tips to help you get there: follow the step-by-step method suggested in the guide, set up a dedicated team, and anticipate the budgetary impact on your company.